Large eddy simulation (LES) makes it possible to simulate flows that are out of reach for direct numerical simulation, with far greater accuracy than conventional engineering methods, and it is now widely used to study complex thermophysics in propulsion and power systems. However, because LES requires extremely complex tools, achieving truly predictive LES has been difficult, sometimes yielding ambiguous results and making suboptimal use of expensive computing time. Moreover, quantifying LES accuracy and troubleshooting problems can be difficult and time-consuming because of the many possible sources of error, including interdependencies among subgrid-scale models, competition between modeling and numerical errors, model variability, and numerical implementation.

To improve the accuracy of predictive LES, the CRF’s Guilhem Lacaze is leading a research team that includes Joe Oefelein (the project's mentor) and Anthony Ruiz. Together, these researchers are creating a comprehensive metrics framework to quantify LES quality and accuracy while accounting for the interdependencies that affect both accuracy and time to solution.

Tackling the Sources of Error

Now well into the project, the team has identified three major categories of error (discretization, numerical methods, and models) and is concentrating on refining solutions for each.

Discretization

The first source of error stems from the difficulty of optimally controlling a simulation’s level of granularity, or discretization. To solve a physical problem numerically, the physical domain of interest is discretized: divided into small volumes called cells. At the cell level, the complex mathematical equations that describe the physical problem are converted into much simpler conservation rules, a procedure that makes the whole problem solvable on a supercomputer.
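To make the idea of per-cell conservation rules concrete, here is a minimal sketch assuming the simplest possible case: 1D linear advection on a periodic domain, solved with a first-order upwind finite-volume scheme. Production LES solvers use far more elaborate 3D schemes; the function name and all parameter values below are illustrative only. Each cell's content changes only by what flows in and out through its two faces, so the total amount is conserved.

```python
import numpy as np

def advect_finite_volume(u0, velocity, dx, dt, n_steps):
    """Advance cell averages of du/dt + a du/dx = 0 on a periodic 1D grid with a
    first-order upwind finite-volume scheme (this sketch assumes velocity > 0)."""
    if velocity <= 0:
        raise ValueError("this sketch assumes a positive advection velocity")
    cfl = velocity * dt / dx
    if cfl > 1.0:
        raise ValueError(f"CFL number {cfl:.2f} > 1: the scheme would be unstable")
    u = np.asarray(u0, dtype=float).copy()
    for _ in range(n_steps):
        # Flux through each cell's right face; upwind means using the left cell's value.
        flux_right = velocity * u
        # Flux through each cell's left face is the right-face flux of its left neighbor.
        flux_left = np.roll(flux_right, 1)
        # Conservation rule per cell: new content = old content + inflow - outflow.
        u = u - dt / dx * (flux_right - flux_left)
    return u

n_cells = 100
dx = 1.0 / n_cells
x = (np.arange(n_cells) + 0.5) * dx              # cell centers
u0 = np.exp(-200.0 * (x - 0.3) ** 2)             # initial Gaussian pulse centered at 0.3
u = advect_finite_volume(u0, velocity=1.0, dx=dx, dt=0.5 * dx, n_steps=80)
print(f"total before: {u0.sum() * dx:.6f}, after: {u.sum() * dx:.6f}")
print(f"pulse peak moved to x = {x[np.argmax(u)]:.3f}")
```

The pulse travels 80 × 0.5 × dx = 0.4 and its total is preserved, but its peak is lower than at the start: a coarse grid smears the solution, which is one way discretization error shows up.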

In general, accuracy increases as cell size decreases, but simulation cost grows with the number of cells. Managing costs may therefore call for less-than-adequate discretization, which in turn can introduce errors. Currently, only highly empirical rules of thumb are available for choosing grid refinement, and the criteria derived to date for fine-tuning accuracy are either not general enough or unrealistic.
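The accuracy-versus-cost trade-off can be sketched with a toy problem. The example below, a hedged illustration rather than anything from the project, measures the error of a standard second-order central difference as the grid is refined and pairs it with an assumed cost proxy for a 3D explicit solver: halving the cell size multiplies the cell count by 8 and, under a CFL-limited time step, doubles the number of steps.

```python
import numpy as np

def central_difference_error(n_cells):
    """Largest pointwise error of the second-order central difference of sin(2*pi*x)
    on a periodic grid with n_cells points."""
    dx = 1.0 / n_cells
    x = np.arange(n_cells) * dx
    f = np.sin(2.0 * np.pi * x)
    dfdx = (np.roll(f, -1) - np.roll(f, 1)) / (2.0 * dx)
    exact = 2.0 * np.pi * np.cos(2.0 * np.pi * x)
    return np.max(np.abs(dfdx - exact))

def relative_cost(n_cells, n_ref):
    """Assumed cost proxy for a 3D explicit solver: cells scale as N**3 and, with a
    CFL-limited time step, the number of steps scales as N, so work scales as N**4."""
    return (n_cells / n_ref) ** 4

resolutions = [16, 32, 64, 128]
errors = [central_difference_error(n) for n in resolutions]
for n, err in zip(resolutions, errors):
    print(f"N = {n:3d}  error = {err:.2e}  relative cost = {relative_cost(n, resolutions[0]):.0f}x")

# Observed order of accuracy from each refinement: p = log2(e_coarse / e_fine).
orders = [float(np.log2(errors[i] / errors[i + 1])) for i in range(len(errors) - 1)]
print("observed orders:", [round(p, 2) for p in orders])
```

Each halving of the cell size cuts the error by about 4 but multiplies the work by about 16, which is why over-refining "just to be safe" quickly becomes unaffordable.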

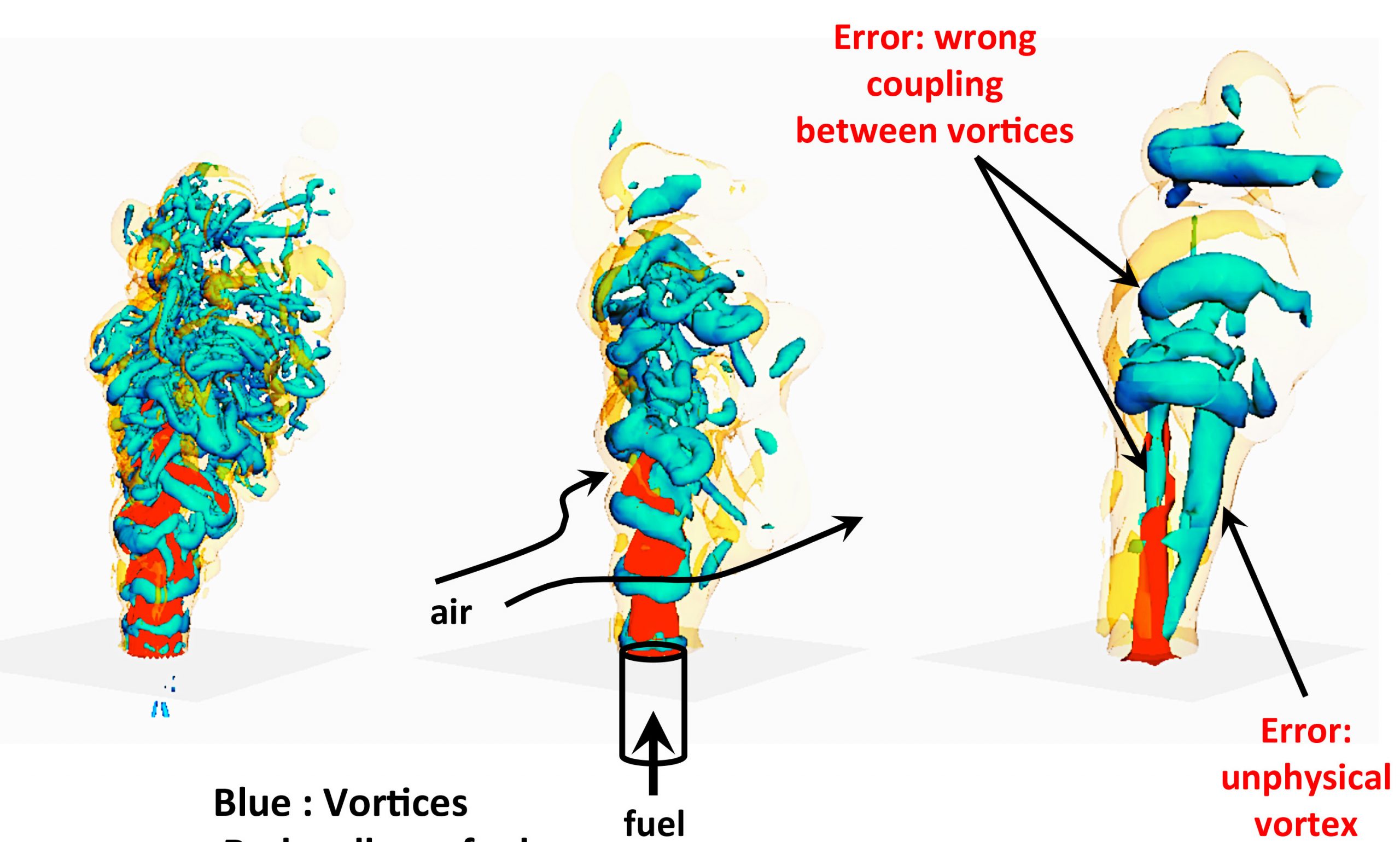

“This project has demonstrated that discretization errors can modify how the physics of a simulated flow are presented, leading to incorrect physical interpretations and delays in developing solutions for transportation and other fields,” said Guilhem. To address this issue, Guilhem’s group is working on a set of numerical tools that determine the size of the important structures (vortices, flames, fuel clouds) that a simulation must capture. Based on assessments from these tools, users can readily define the optimal granularity, maximizing accuracy while minimizing simulation cost and setup time.
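The article does not describe how the team's tools measure structure sizes. As a hedged illustration of the general idea only, the sketch below measures one kind of structure, a flame, using the standard thermal-thickness definition (T_max - T_min) / max|dT/dx|, and converts it into a maximum grid spacing. The synthetic tanh temperature profile, its values, and the target of 10 cells across the flame are all assumptions; that target is itself an empirical choice.

```python
import numpy as np

def thermal_thickness(x, temperature):
    """Thermal thickness of a 1D flame profile: (T_max - T_min) / max|dT/dx|."""
    dTdx = np.gradient(temperature, x)
    return (temperature.max() - temperature.min()) / np.max(np.abs(dTdx))

def required_spacing(structure_size, cells_per_structure):
    """Grid spacing that places the requested number of cells across a structure."""
    return structure_size / cells_per_structure

# Hypothetical synthetic flame: 300 K to 2000 K with a prescribed thickness of 0.5 mm.
x = np.linspace(0.0, 0.01, 2001)                 # 1 cm domain, finely sampled [m]
delta_true = 5e-4                                # prescribed thermal thickness [m]
T = 300.0 + 850.0 * (1.0 + np.tanh(2.0 * (x - 0.005) / delta_true))

thickness = thermal_thickness(x, T)
dx_needed = required_spacing(thickness, cells_per_structure=10)  # assumed target
print(f"flame thickness ~ {thickness * 1e3:.3f} mm -> grid spacing <= {dx_needed * 1e6:.1f} um")
```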

Numerical Methods

The second source of error lies in the numerical methods needed to resolve flows with strong gradients, such as the liquid-gas interfaces found in most engines, where species composition, temperature, and density change sharply. Because classical numerical methods produce errors in the presence of steep variations, stabilization methods are employed to avoid unphysical results. However, the dissipative properties of these stabilization methods also locally reduce the precision of the solver (the software that solves the discretized equations). To preserve accuracy, the project team developed a stabilization approach that acts very locally and only when numerical problems are detected.
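The team's own stabilization method is not detailed here. A widely used technique in the same spirit is sensor-based artificial dissipation: a sensor flags cells where the solution jumps abruptly, and smoothing is added only there. The sketch below assumes a normalized second-difference sensor similar to the Jameson-Schmidt-Turkel pressure switch on a periodic 1D grid; the threshold and strength values are illustrative, not tuned.

```python
import numpy as np

def jump_sensor(q, eps=1e-12):
    """Normalized second-difference sensor on a periodic 1D grid (in the spirit of the
    Jameson-Schmidt-Turkel pressure switch). Assumes q > 0, e.g. density or pressure.
    Near 0 where q is smooth, of order 0.1 to 1 across an abrupt jump."""
    q_left, q_right = np.roll(q, 1), np.roll(q, -1)
    return np.abs(q_right - 2.0 * q + q_left) / (q_right + 2.0 * q + q_left + eps)

def apply_local_dissipation(q, strength=0.25, threshold=0.01):
    """One explicit smoothing step applied only next to flagged cells.
    strength (<= 0.5 for stability) and threshold are illustrative, untuned values."""
    flagged = jump_sensor(q) > threshold
    # Dissipation coefficient on face i+1/2: nonzero only if cell i or i+1 is flagged.
    face_coeff = strength * np.maximum(flagged, np.roll(flagged, -1))
    # Diffusive flux through face i+1/2; updating with flux differences conserves sum(q).
    face_flux = face_coeff * (np.roll(q, -1) - q)
    return q + face_flux - np.roll(face_flux, 1), flagged

x = np.linspace(0.0, 1.0, 200, endpoint=False)
rho = 1.0 + 0.1 * np.sin(2.0 * np.pi * x)   # smooth background field
rho[x > 0.5] += 1.0                          # sharp jump (the periodic wrap adds one at x = 0)
rho_new, flagged = apply_local_dissipation(rho)
print(f"cells flagged: {flagged.sum()} of {rho.size}")
```

Only the cells on either side of the two jumps are flagged; everywhere else the field is left exactly as it was, so the smooth regions keep the solver's full precision.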

Models

The third source of error relates to the complex thermodynamic models used to simulate high-pressure flows in engines. Because these models were not developed for the solvers used in LES, under certain conditions they can produce aberrant results that are inconsistent with physical reality. “You can see spikes in the data that go to infinity, which we know is impossible,” said Guilhem.

To solve this problem, Guilhem has developed software that provides accurate, physically consistent thermodynamic properties of the flow. These properties are precomputed and constrained to stay within their respective physical ranges. In addition, the tool automatically detects singularities (spikes) and locally increases its accuracy to ensure precision and stability. “This stand-alone tool, which will be available globally, has the potential to significantly improve simulation precision at a very attractive computational cost,” Guilhem said.
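The tool's internals are not described in the article. The sketch below illustrates, under stated assumptions, the three ingredients it mentions: properties precomputed into a table, values clamped to a physical range, and local refinement where the property varies sharply. It uses adaptive bisection driven by a midpoint interpolation-error test, a generic technique swapped in for illustration. The property function is hypothetical, a sharp peak standing in for behavior such as heat capacity near a pseudo-critical point, and the tolerance and bounds are arbitrary.

```python
import bisect

def toy_property(t):
    """HYPOTHETICAL property with a sharp peak at t = 1, a stand-in for a quantity such as
    heat capacity near a pseudo-critical point. Not a real equation of state."""
    return 1.0 + 1.0 / ((t - 1.0) ** 2 + 1e-3)

def clamp(v, lo, hi):
    return min(max(v, lo), hi)

def build_adaptive_table(f, t_lo, t_hi, tol, y_bounds, n_initial=8, max_depth=20):
    """Precompute f on [t_lo, t_hi]: start from a uniform grid, then bisect any interval whose
    midpoint value differs from the linear interpolant by more than tol. Stored values are
    clamped to the physical range y_bounds = (y_min, y_max)."""
    y_min, y_max = y_bounds
    step = (t_hi - t_lo) / n_initial
    grid = [t_lo + i * step for i in range(n_initial + 1)]
    values = {t: clamp(f(t), y_min, y_max) for t in grid}

    def refine(a, b, depth):
        m = 0.5 * (a + b)
        fm = clamp(f(m), y_min, y_max)
        if depth < max_depth and abs(fm - 0.5 * (values[a] + values[b])) > tol:
            values[m] = fm            # keep the midpoint only where it is needed
            refine(a, m, depth + 1)
            refine(m, b, depth + 1)

    for a, b in zip(grid, grid[1:]):
        refine(a, b, 0)
    ts = sorted(values)
    return ts, [values[t] for t in ts]

def lookup(ts, ys, t):
    """Linear interpolation in the table; queries outside the table are clamped to its range."""
    t = clamp(t, ts[0], ts[-1])
    i = min(bisect.bisect_right(ts, t), len(ts) - 1)
    w = (t - ts[i - 1]) / (ts[i] - ts[i - 1])
    return (1.0 - w) * ys[i - 1] + w * ys[i]

ts, ys = build_adaptive_table(toy_property, 0.0, 2.0, tol=1.0, y_bounds=(1.0, 2000.0))
spacing = [b - a for a, b in zip(ts, ts[1:])]
print(f"{len(ts)} points, finest interval {min(spacing):.1e}, coarsest {max(spacing):.2f}")
print(f"value at peak {lookup(ts, ys, 1.0):.1f}, query far outside range {lookup(ts, ys, 5.0):.3f}")
```

Table points cluster around the peak while smooth regions keep the coarse initial spacing, so lookups stay cheap and bounded even where the underlying model misbehaves.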

Promising Preliminary Outcomes

The team is encouraged by the project’s promising preliminary outcomes, which could help reverse an expensive trend. “We estimate that only about 20% of a typical simulation’s computational time is productive,” said Guilhem. “Our goal is to provide practical guidelines and affordable tools for both research and industry. If successful, these tools will heighten confidence in LES results and reduce the time and cost needed to prepare, compute, assess, and validate simulations.”