What We Do

The Resilient EXtreme Scale Scientific Simulations (REXSSS) team develops methods to increase the resiliency of scientific simulations to system faults, such as node crashes or silent data corruptions (SDCs) from bit flips. Most of our work so far has focused on resilient solvers for elliptic partial differential equations (PDEs) using highly scalable task-based implementations.

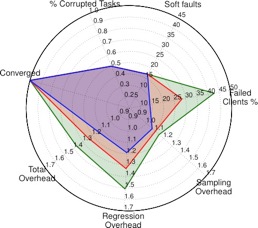

Figure 2. This radar plot shows the behavior of our resilient elliptic PDE solver when subjected to a fixed number of SDCs and three different levels of hard faults (each shown in a different color), simulated in the form of clients crashing. The plot shows the percentage of corrupted tasks, the number of soft faults (SDCs), the percentage of failed clients, the overhead of maintaining resilience relative to a fault-free run, and a Boolean value indicating convergence. Even at very high fault rates (almost 50% of client nodes crashing and 15 SDCs), the PDE solver still reaches the correct solution. [1]

Purpose

As computer architectures move towards the exascale level, they will include many more components than do today’s machines, and all of these components will operate at a lower voltage to reduce energy consumption. These factors combined are likely to push exascale machines to much higher fault rates than exhibited by today’s machines. To perform meaningful amounts of work on such architectures, scientific simulations need to be resilient to system faults and capable of scaling to millions of parallel threads. Without this resilience and the scaling capability, combustion, climate, and other types of simulations will not fully benefit from the computational power provided by exascale architectures.

Key Contributions

- Developed a method to filter out the effect of SDC without the need to explicitly detect the occurrence of a fault

- Reformulated the solution of PDEs into a task-based formulation

- For this task-based algorithm, implemented a server-client framework that demonstrated both strong and weak scaling above 90% for 2D elliptic PDEs tested on 115,000 cores on the Edison supercomputer at the National Energy Research Scientific Computing Center (NERSC)

- Relied on the User Level Failure Mitigation (ULFM) extension to MPI so that client nodes can go down without interrupting solver execution
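For readers unfamiliar with the scaling figures quoted above, the following minimal sketch shows the usual definitions of strong and weak scaling efficiency. The function names and timings are hypothetical and purely illustrative; they are not measurements from the Edison runs.

```python
def strong_scaling_efficiency(t_base, p_base, t, p):
    """Fixed total problem size: the ideal runtime falls as p_base / p."""
    return (t_base * p_base) / (t * p)


def weak_scaling_efficiency(t_base, t):
    """Fixed problem size per core: the ideal runtime stays constant."""
    return t_base / t


# Hypothetical timings in seconds, for illustration only (not measured data).
strong = strong_scaling_efficiency(t_base=100.0, p_base=1_000, t=13.0, p=8_000)
weak = weak_scaling_efficiency(t_base=100.0, t=105.0)
print(f"strong: {strong:.1%}, weak: {weak:.1%}")
```

An efficiency of 1.0 (100%) corresponds to ideal scaling under either definition.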

Technical Details

Making effective use of the evolving exascale hardware presents significant challenges. While the precise nature of exascale architectures is still unknown, some features can be anticipated. For example, the architecture will involve a large number of nodes, with large intra-node concurrency. Further, the overall number of components (such as memory chips, CPUs, and accelerator cards) is expected to grow, but each node will operate at lower voltage than do today’s nodes to meet energy consumption requirements.

Combined, these attributes will result in a higher frequency of both hard faults (nodes crashing) and SDCs caused by random bit flips.

Error correction may mitigate such faults, but the correction process will lead to asynchronicity in the execution of operations. Further, error correction will require more expensive hardware (in terms of performance, energy, and money), and may fail in the event of concurrent bit flips. To fully benefit from exascale architectures, applications will need to have very strong fine- and coarse-grained concurrency to scale well; be very tolerant of asynchronicity in communications; and be resilient to both hard faults and SDCs.

To reach this goal, our research group has developed a PDE solver that relies on a domain decomposition approach to achieve both scalability and resilience. The method is a generalization of an accelerated additive Schwarz preconditioner. The PDE is first solved on subdomains for a set of sampled values of the subdomain boundary conditions. These solutions are then used to infer maps that relate the solution at each subdomain boundary point to the solution at the boundary of neighboring subdomains. These maps form a system of algebraic equations that can be solved to obtain the solution of the global PDE at each of the subdomain boundaries. We also extended this approach to allow for parametric uncertainty in the PDEs by adding a stochastic dimension to the boundary-to-boundary maps, resulting in a hybrid-intrusive approach for uncertain PDEs.
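The boundary-to-boundary idea can be illustrated on a toy problem. The sketch below is not the team's implementation: it assumes a 1D Poisson problem, two overlapping subdomains, a simple finite-difference solver, and an ordinary least-squares fit. The grid size, overlap indices, forcing term, and all function names are hypothetical choices made for illustration.

```python
import numpy as np


def solve_subdomain(x, f, left, right):
    """Finite-difference solve of -u'' = f on grid x with Dirichlet values at both ends."""
    n, h = len(x), x[1] - x[0]
    A = np.zeros((n, n))
    rhs = f(x) * h**2
    for i in range(1, n - 1):
        A[i, i - 1], A[i, i], A[i, i + 1] = -1.0, 2.0, -1.0
    A[0, 0] = A[-1, -1] = 1.0
    rhs[0], rhs[-1] = left, right
    return np.linalg.solve(A, rhs)


def forcing(x):
    """Hypothetical forcing term."""
    return np.sin(np.pi * x)


x = np.linspace(0.0, 1.0, 101)       # global grid
u0, u1 = 0.0, 1.0                    # global Dirichlet data
i_l, i_r = 40, 60                    # subdomain 1 = x[:i_r + 1], subdomain 2 = x[i_l:]
samples = np.linspace(-1.0, 2.0, 5)  # sampled values of the unknown boundary condition

# Subdomain 1: vary its right boundary value u(x_r), record the solution at x_l.
out1 = [solve_subdomain(x[: i_r + 1], forcing, u0, v)[i_l] for v in samples]
# Subdomain 2: vary its left boundary value u(x_l), record the solution at x_r.
out2 = [solve_subdomain(x[i_l:], forcing, v, u1)[i_r - i_l] for v in samples]

# Infer the boundary-to-boundary maps u_l = a1 + b1 * u_r and u_r = a2 + b2 * u_l.
b1, a1 = np.polyfit(samples, out1, 1)
b2, a2 = np.polyfit(samples, out2, 1)

# The two maps form a small algebraic system for the subdomain boundary values.
u_l, u_r = np.linalg.solve([[1.0, -b1], [-b2, 1.0]], [a1, a2])

# Reference: a single solve over the whole domain.
u_ref = solve_subdomain(x, forcing, u0, u1)
print(u_l - u_ref[i_l], u_r - u_ref[i_r])
```

Because the PDE in this toy case is linear, the maps are affine and the boundary values recovered from the 2-by-2 system agree with the single global solve up to round-off. Each call to `solve_subdomain` is independent of the others, which is what makes the sampling stage easy to distribute as tasks.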

The algorithm naturally lends itself to a parallel implementation. Each of the subdomain solves is a task that is executed in a server-client framework. In the current implementation, the servers hold the solution state and are assumed to be free of hard faults and SDCs. The clients are stateless and request tasks from the servers, execute them, and send the results back. When clients crash, the server merely hands out tasks to the remaining clients, which gives the algorithm resilience to client hard faults. To protect against SDCs, a regression approach that is robust to outliers is used to infer the boundary-to-boundary maps from the sampled subdomain solutions. Note that by relaxing the resilience requirements for the client nodes, those nodes can potentially run at lower voltages (and associated higher fault rates) to save energy without affecting the fidelity of the overall simulation.
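The task hand-out logic described above can be sketched in a single process. This is only a simulation under stated assumptions: the `Client`, `ClientCrashed`, and `serve` names are hypothetical, a crash is modeled as an exception, and the "solve" is a stand-in computation. The actual framework runs over MPI with ULFM, which this sketch does not attempt to reproduce.

```python
from collections import deque


class ClientCrashed(Exception):
    """Stand-in for a client node failure (hypothetical)."""


class Client:
    def __init__(self, cid, crash_after=None):
        self.cid = cid
        self.crash_after = crash_after  # tasks completed before the simulated crash
        self.done = 0

    def run(self, task):
        if self.crash_after is not None and self.done >= self.crash_after:
            raise ClientCrashed(self.cid)
        self.done += 1
        return task * task  # placeholder for a subdomain solve


def serve(tasks, clients):
    """Server loop: hand tasks to live clients and requeue work lost to a crash."""
    pending = deque(tasks)
    alive = deque(clients)
    results = {}  # the server, assumed fault-free, holds all state
    while pending:
        if not alive:
            raise RuntimeError("all clients have failed")
        task = pending.popleft()
        client = alive.popleft()
        try:
            results[task] = client.run(task)
            alive.append(client)  # client stays in the rotation
        except ClientCrashed:
            pending.append(task)  # crashed client is dropped; task goes to another
    return results


clients = [Client(0), Client(1, crash_after=1), Client(2, crash_after=0)]
results = serve(range(8), clients)
print(results)
```

Because the clients are stateless, losing one costs only the task it was running, which the server reissues to a remaining client.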

So far, this approach has been tested on 2D elliptic PDEs with very promising results. Scalability tests using up to 115,000 cores on Edison at NERSC showed weak and strong scaling efficiencies above 90%. The algorithm is resilient to SDCs in the form of single and multiple bit flips in the client solves, returning the exact answer even under very high fault rates. Using a ULFM-MPI implementation, we also demonstrated resilience to hard faults on the clients by terminating their MPI ranks.
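To make the SDC filtering concrete, the sketch below injects bit flips into sampled values and fits a line with an outlier-robust estimator. The source does not say which robust regression the team uses; the Theil-Sen estimator is swapped in here only because it is short to write down. The data, bit positions, and function names are hypothetical.

```python
import struct
from itertools import combinations

import numpy as np


def flip_bit(value, bit):
    """Flip one bit (0 = least significant) of an IEEE-754 double."""
    (as_int,) = struct.unpack("<Q", struct.pack("<d", float(value)))
    (flipped,) = struct.unpack("<d", struct.pack("<Q", as_int ^ (1 << bit)))
    return flipped


def theil_sen(x, y):
    """Robust line fit: median of pairwise slopes, then median intercept."""
    pairs = combinations(range(len(x)), 2)
    slopes = [(y[j] - y[i]) / (x[j] - x[i]) for i, j in pairs]
    slope = np.median(slopes)
    intercept = np.median(y - slope * x)
    return slope, intercept


# Hypothetical clean samples of an affine boundary-to-boundary map.
x = np.linspace(-1.0, 1.0, 9)
y = 3.0 + 0.75 * x

# Simulated SDCs: one exponent-bit flip and one sign-bit flip.
y[2] = flip_bit(y[2], 58)
y[6] = flip_bit(y[6], 63)

ls_slope, ls_intercept = np.polyfit(x, y, 1)  # ordinary least squares
ts_slope, ts_intercept = theil_sen(x, y)      # robust fit
print("least squares:", ls_slope, ls_intercept)
print("Theil-Sen:    ", ts_slope, ts_intercept)
```

In this example the robust fit returns the original slope 0.75 and intercept 3.0 without any step that identifies which samples were corrupted, while the least-squares fit is dominated by the exponent-bit flip.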

Note that this framework acts as a preconditioner in the sense that it wraps around existing numerical discretizations of PDEs on each subdomain. As such, our framework can complement other solver developments to achieve scalability and resilience. Further, it is not meant to replace existing solvers, but to enable their use in an extreme scale environment where hard faults and SDCs are frequent.

- Bert Debusschere

- Karla Morris

- Francesco Rizzi

- Khachik Sargsyan

[1] F. Rizzi, K. Morris, K. Sargsyan, P. Mycek, C. Safta, O. Le Maître, O. Knio, and B. Debusschere, “ULFM-MPI Implementation of a Resilient Task-Based Partial Differential Equations Preconditioner”, 6th Workshop on Fault-Tolerance for HPC at eXtreme Scale (FTXS 2016), at the 25th International ACM Symposium on High Performance Distributed Computing (HPDC 2016), Kyoto, Japan, May 31-June 4, 2016

[2] K. Morris, F. Rizzi, K. Sargsyan, K. Dahlgren, P. Mycek, C. Safta, O. Le Maître, O.M. Knio, and B.J. Debusschere, “Scalability of Partial Differential Equations Preconditioner Resilient to Soft and Hard Faults”, ISC High Performance, Frankfurt, Germany, Jun 19-23, 2016

[3] F. Rizzi, K. Morris, K. Sargsyan, P. Mycek, C. Safta, O. Le Maître, O.M. Knio, and B.J. Debusschere, “Exploring the Interplay of Resilience and Energy Consumption for a Task-Based Partial Differential Equations Preconditioner”, PP4REE 2016 – Workshop on Parallel Programming for Resilience and Energy Efficiency at the Principles and Practice of Parallel Programming 2016 (PPOPP16) conference, Barcelona, Spain, March 12-16, 2016

[4] F. Rizzi, K. Morris, K. Sargsyan, C. Safta, P. Mycek, O. Le Maître, O. Knio and B. Debusschere, “Partial Differential Equations Preconditioner Resilient to Soft and Hard Faults”, FTS 2015, First International Workshop on Fault Tolerant Systems, IEEE Cluster 2015, Chicago, IL, Sep 8, 2015

[5] K. Sargsyan, F. Rizzi, P. Mycek, C. Safta, K. Morris, H. Najm, O. Le Maître, O. Knio, and B. Debusschere, “Fault Resilient Domain Decomposition Preconditioner for PDEs”, SIAM Journal on Scientific Computing, 37(5), A2317–A2345